World-Building in Oz with Generative AI

What I Accomplished

I generated a rich tapestry of cinematic imagery inspired by The Wizard of Oz using Midjourney (to generate images) and Pikabot (to generate animation).

I developed image prompts using a vocabulary of visual culture spanning from Enlightenment-era oil painting to 20th-century photography and filmmaking.

I developed animation prompts using creative prose and action-oriented language in addition to references to visual culture.

I deepened my practical knowledge of generative AI: how models function, how they are trained, and what their strengths and limitations are.

Introduction

Over the last several years, companies including OpenAI, Google and DeepSeek have entered the AI race, releasing consumer-facing products built on generative models.

Throughout this initial phase of generative-AI products hitting the global market, I was beginning creative work on written sequels to the classic fairytale, The Wonderful Wizard of Oz (1900). A fascination with Oz that began in childhood recaptured my imagination, and I became absorbed in writing and illustrating.

I recognized the potential for realizing my creative vision in high fidelity using generative AI, and so my experiments began…

Process

Prompt Development

Syntax and Structure

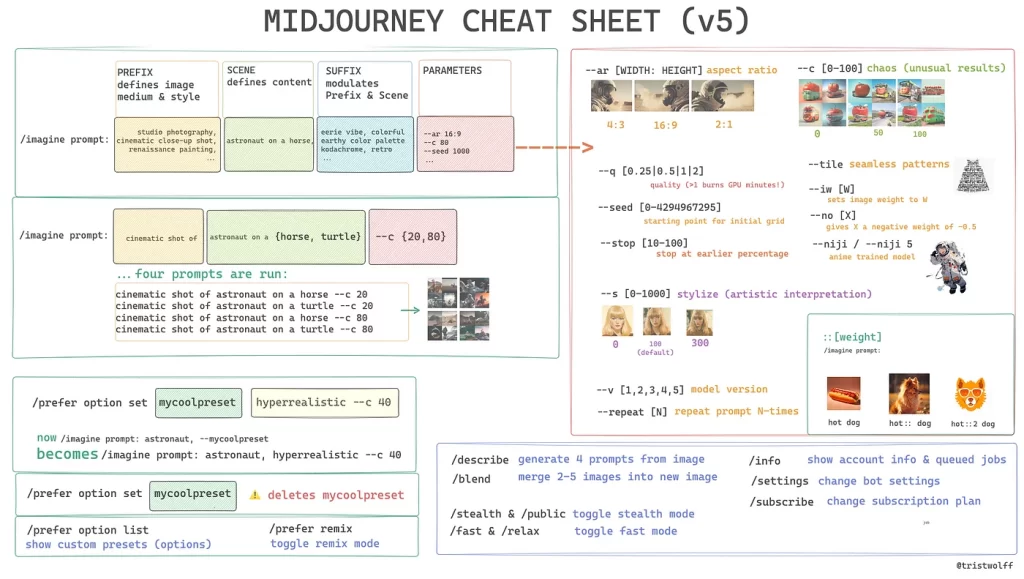

Midjourney and other generative tools expect a specific prompt syntax and structure in order to achieve the best results for the given model. My generations improved significantly after I began to employ these conventions; however, they never guaranteed that a given prompt would succeed on the first pass.

Example of a cheat sheet that users can refer to in order to familiarize themselves with the preferred structure and syntax of Midjourney’s prompt function.

Each generative product has a unique suggestion for structure and syntax that will lead to the best results for that product.
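As a rough illustration of what "structure and syntax" can mean in practice, here is a minimal Python sketch that assembles a prompt from a subject, a list of style references, and trailing parameter flags. The `PromptSpec` and `build_prompt` names are hypothetical helpers invented for this example, and the flags shown (`--ar`, `--stylize`, `--no`) reflect Midjourney's parameter list as commonly documented; they should be checked against the current documentation before use.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a text-to-image prompt."""
    subject: str
    style_references: List[str] = field(default_factory=list)
    aspect_ratio: Optional[str] = None   # e.g. "16:9"
    stylize: Optional[int] = None        # strength of the model's own aesthetic
    exclude: List[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Join the descriptive text first, then append parameter flags at the end."""
    parts = [spec.subject.strip()]
    parts.extend(ref.strip() for ref in spec.style_references)
    text = ", ".join(p for p in parts if p)

    flags = []
    if spec.aspect_ratio:
        flags.append(f"--ar {spec.aspect_ratio}")
    if spec.stylize is not None:
        flags.append(f"--stylize {spec.stylize}")
    if spec.exclude:
        flags.append("--no " + ", ".join(spec.exclude))

    return " ".join([text, *flags])


if __name__ == "__main__":
    spec = PromptSpec(
        subject="a green tornado",
        style_references=["pastoral oil painting", "autochrome photograph"],
        aspect_ratio="16:9",
        stylize=250,
        exclude=["text"],
    )
    print(build_prompt(spec))
```

Keeping the descriptive text and the flags in separate fields makes it easier to re-run the same idea with a different ratio or style strength without retyping the whole prompt.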

Vocabulary of Visual Culture

I drew on my knowledge of art history and visual culture to make precise references to periods, styles, specific artists and specific works.

Layering elements—

(a) the shape of a jellyfish, and

(b) the quality of movement of a jellyfish, with the

(c) textural quality, and

(d) environmental context of a cloud

—produced a series of phantasmagoria from some Art Basel of the future.

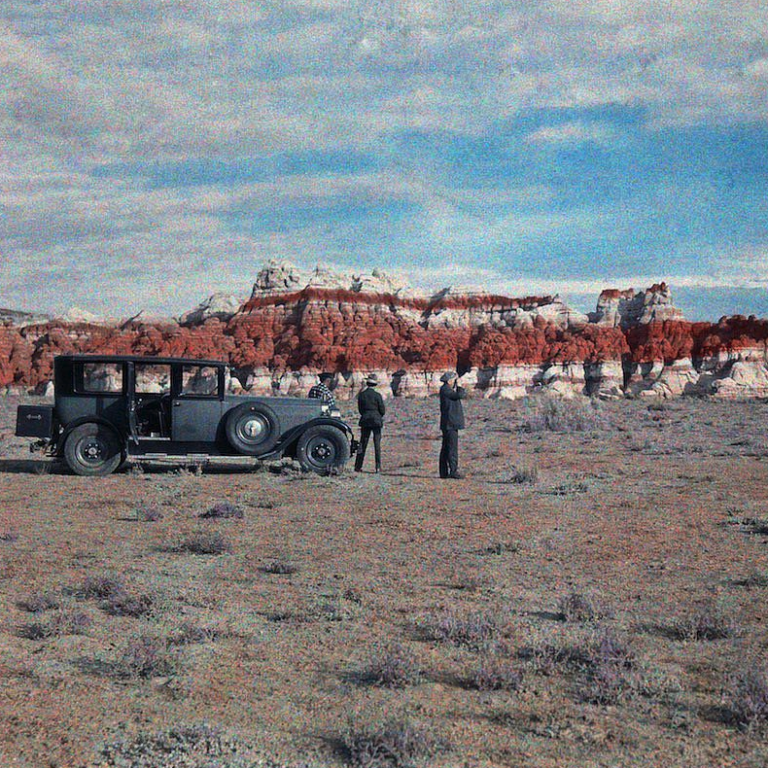

To produce these alien phenomena, the text of the prompt made reference to pastoral oil painting from the 18th to 20th centuries, as well as to early colour photographs known as autochromes.

In addition to producing the desired “green tornado”, I was able to generate numerous variations that interpreted the creative prompt in wildly different ways.

Images as References

Midjourney and other gen-AI tools allow the use of images as references within the body of the prompt.

The author can steer the prompt to reflect elements from multiple image references in a kind of fusion, combination or juxtaposition that blends with the text direction of the prompt.

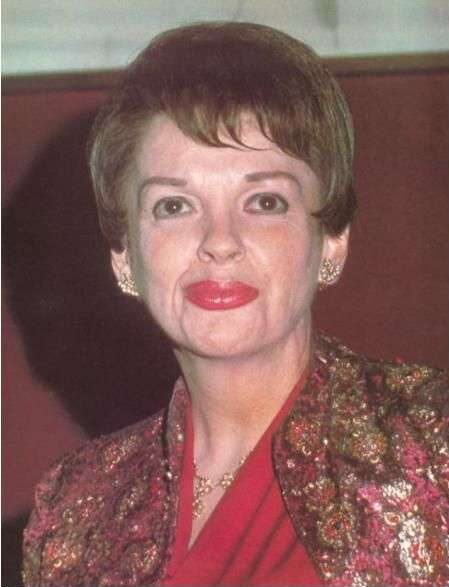

Creating a representation of an individual with an uncommon or unconventional skin tone was surprisingly challenging; this was the first real instance where I developed a concept using images as references within the body of the prompt.

Variations

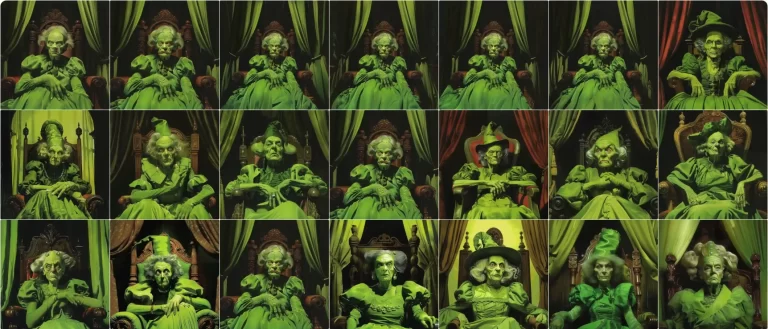

It’s possible to produce an enormous amount of variety with generative image-making tools.

By deriving variations, the user creates a rootlike or branchlike map of generative outputs as they steer the model towards a desired outcome.

In many instances, it was through experimental variation that I arrived at the imagery that was most impactful to my creative eye.
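The branching map of variations described above can be pictured as a simple tree. The following Python sketch is only a way of modelling that idea for record-keeping; the `Generation` class and its methods are hypothetical and do not correspond to any feature of Midjourney or another tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Generation:
    """One output in a (hypothetical) tree of variations."""
    label: str
    prompt: str
    # repr=False avoids infinite recursion when printing parent <-> child links
    parent: Optional["Generation"] = field(default=None, repr=False)
    children: List["Generation"] = field(default_factory=list)

    def vary(self, label: str, prompt: Optional[str] = None) -> "Generation":
        """Record a variation; it reuses the parent's prompt unless a new one is given."""
        child = Generation(label=label, prompt=prompt or self.prompt, parent=self)
        self.children.append(child)
        return child

    def lineage(self) -> List[str]:
        """Return the labels from the root down to this generation."""
        path = []
        node: Optional["Generation"] = self
        while node is not None:
            path.append(node.label)
            node = node.parent
        return list(reversed(path))


if __name__ == "__main__":
    root = Generation("root", "winged monkey")
    first = root.vary("v1")
    second = first.vary("v1.2", prompt="winged monkey, sorrowful charm")
    print(" > ".join(second.lineage()))
```

Tracking lineage this way makes it possible to return to a promising branch after an experiment leads somewhere unproductive.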

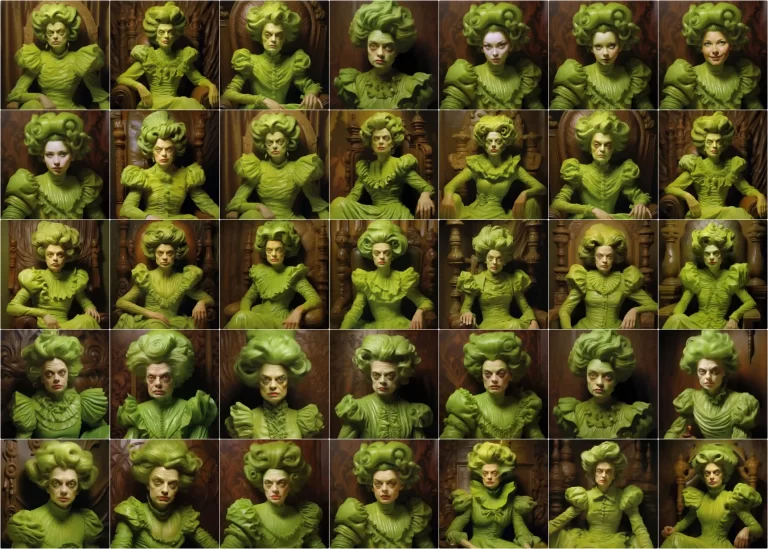

An example of the abundance possible with variations; the final image was produced over a series of working sessions, where the prompt was adjusted or completely re-written multiple times.

More Examples

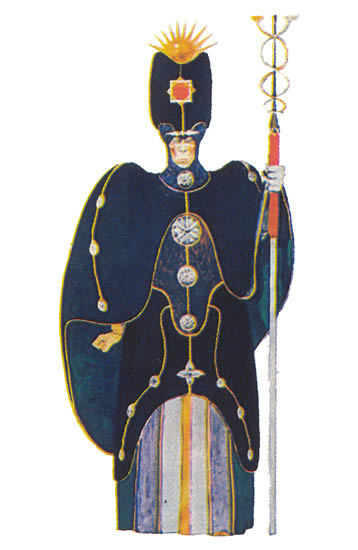

The Cowardly Lion, in the style of Matthew Barney

Matthew Barney is a contemporary cinema artist who uses high-tech film cameras and cinema-grade prosthetics to build the surreal and provocative worlds of his expansive film series, The Cremaster Cycle (1-5); the five films were released between 1994 and 2002.

Using the syntax “in the style of” generated representations of the humanoid Cowardly Lion from MGM’s 1939 musical The Wizard of Oz, with visual stylings reminiscent of Matthew Barney’s cinematography-driven artistic works.

Midjourney did an exceptional job of capturing the essential visual flavour of both the Cowardly Lion and Matthew Barney’s work overall.

The Winged Monkeys

I found these images to be particularly moving; the characters feel imbued with layers of personality and a sorrowful charm, challenging the notion that generative-AI will always produce some experience of the uncanny valley within the viewer—even when the hand of AI is most evident.

It’s an example of imperfect generation not necessarily detracting from the innate beauty of a generated image. These generations in particular raised a question for me: can, or should, these images be considered valid artistic expressions?

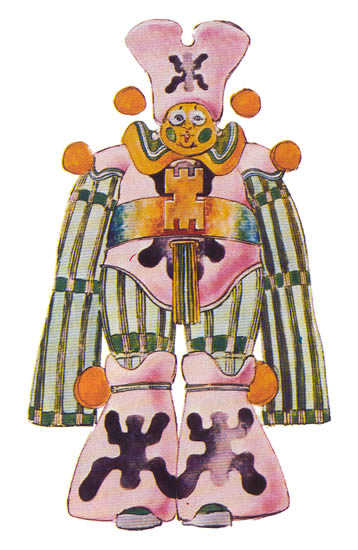

Countryfolk and Magical Species of Oz

These images are an example of the mass variation that became possible once a desired style was achieved; the first successful variation led to an abundance of characterizations in the same distinct style and colour scheme, each with its own nuanced physical characteristics.

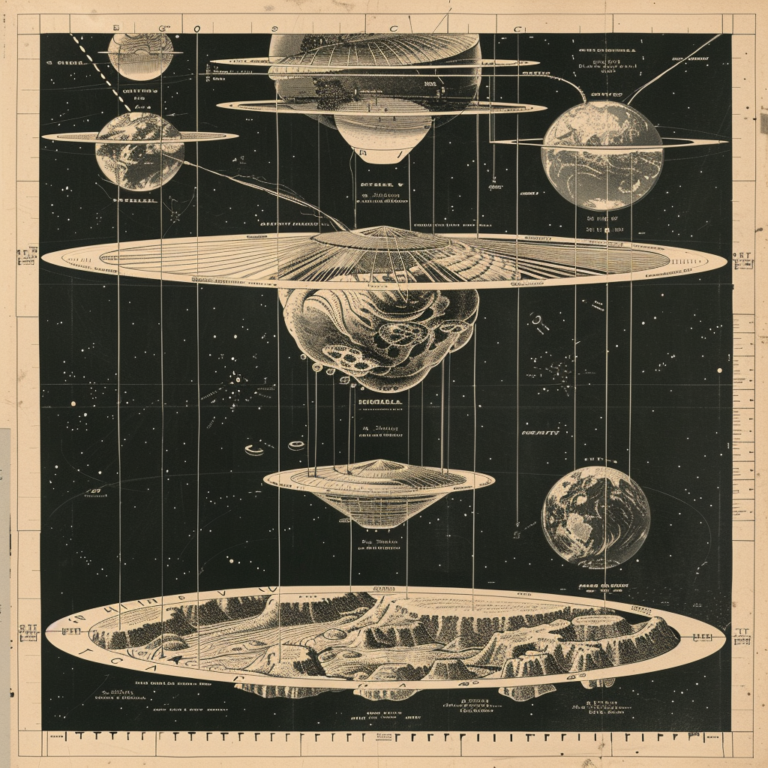

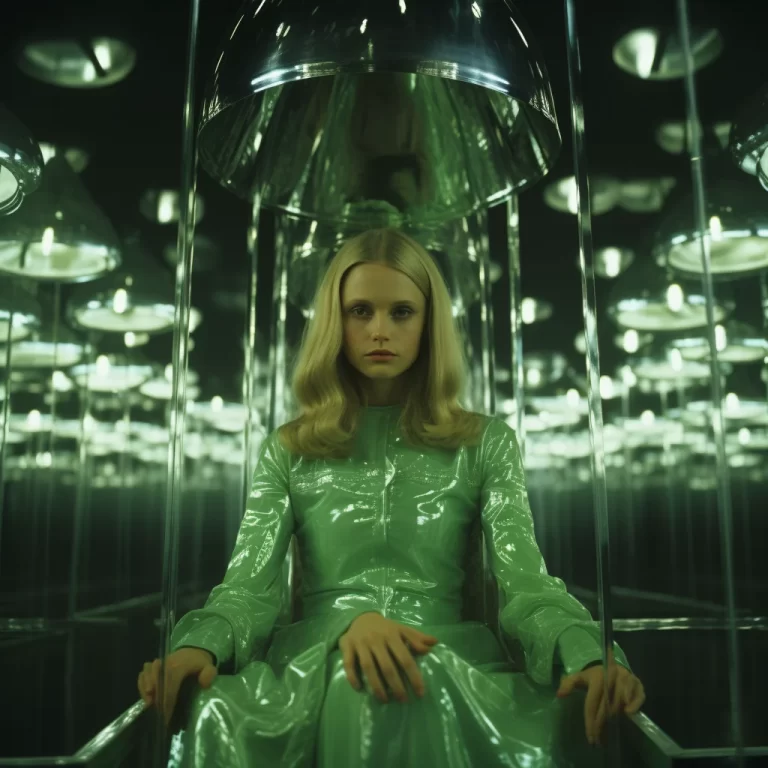

The Distant Future of Oz

My writing is largely inspired by science fiction from the 1960s to the 1990s. Key inspirations include Alice Bradley Sheldon (who wrote under the pseudonym James Tiptree Jr.) and Larry Niven (author of the mind-bending sci-fi classic, Ringworld (1970)).

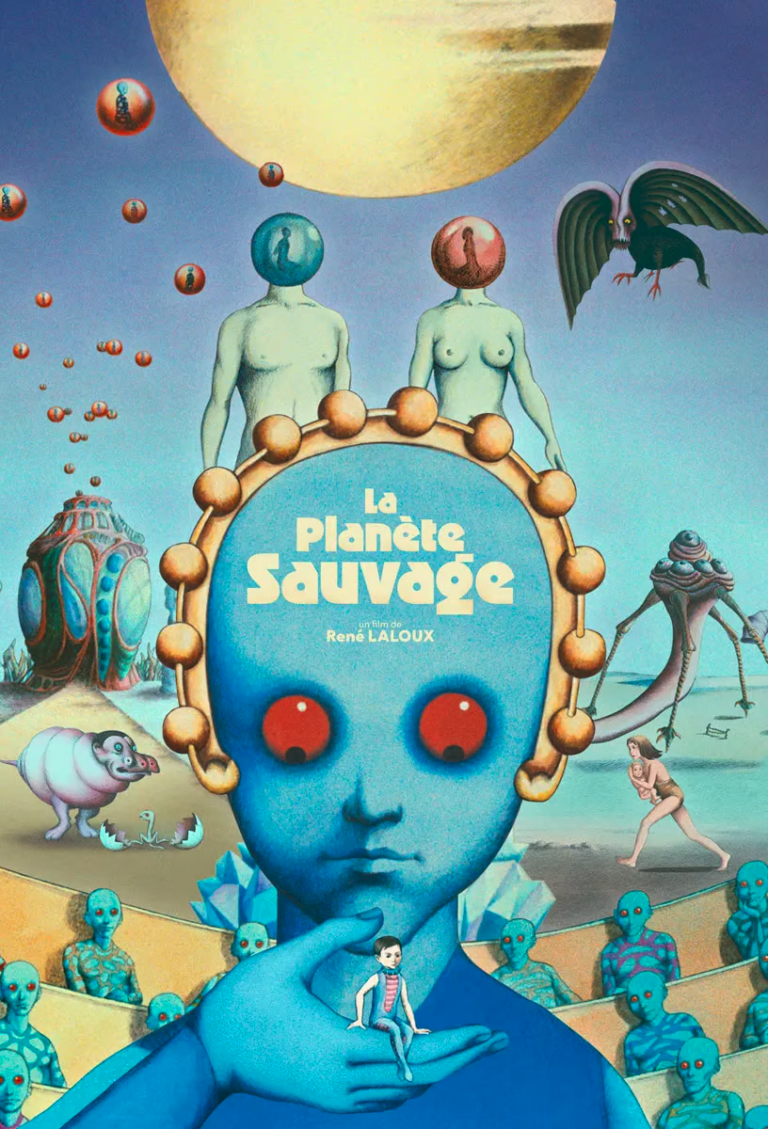

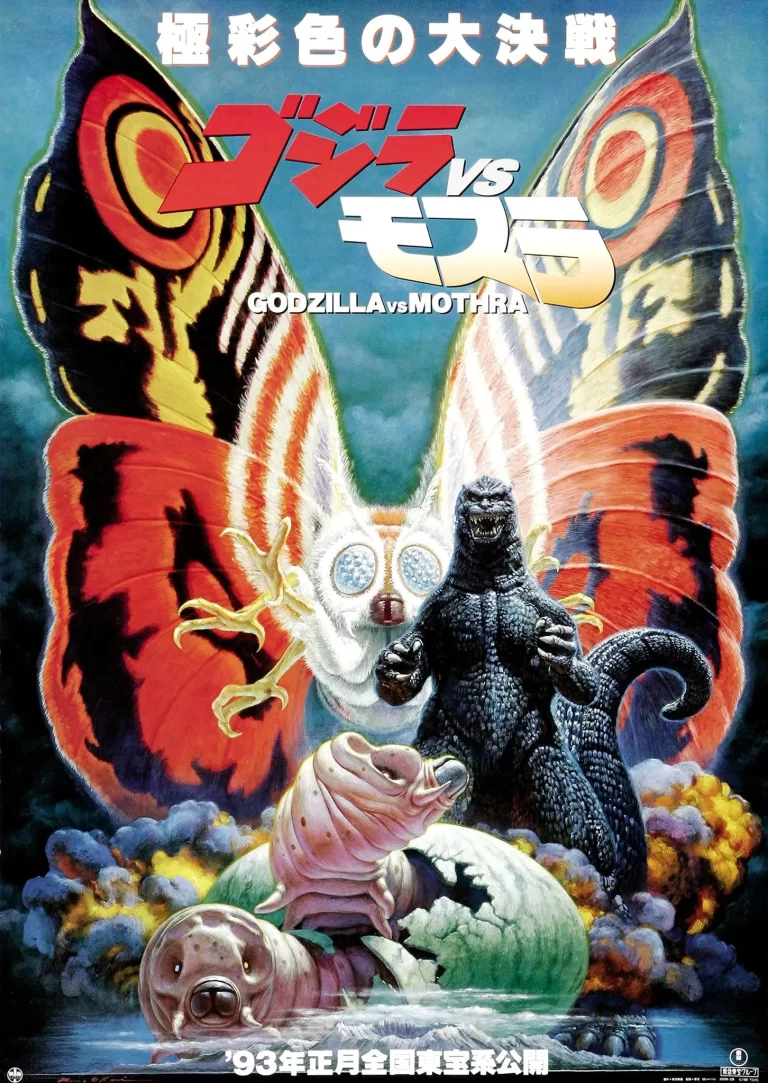

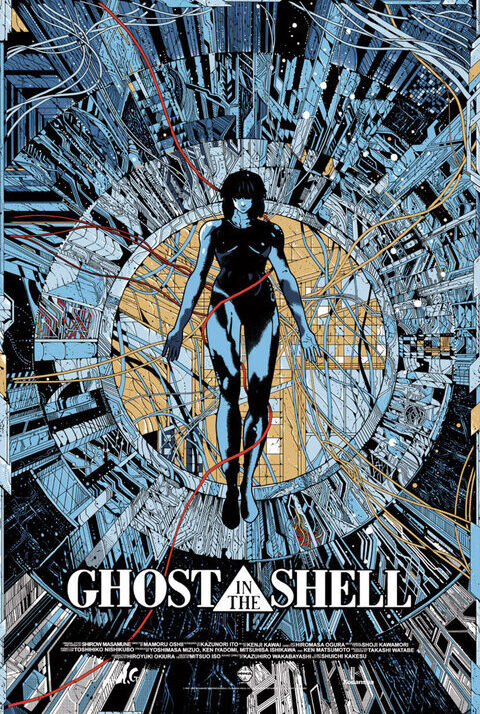

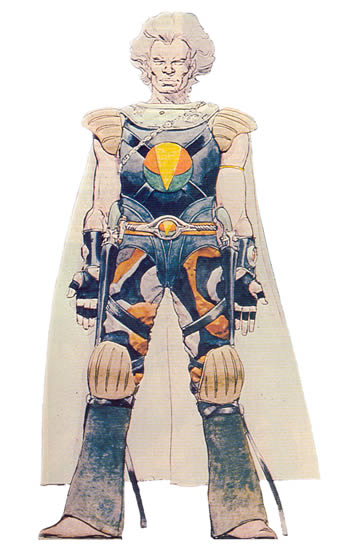

In addition to literary inspiration, my visual explorations using generative AI also heavily reference sci-fi cinema and animation, as in the examples below.

From (Top) Left: La Planète Sauvage (1973); Godzilla vs. Mothra (1992); Ghost in the Shell (1995); concept art by Moebius (Jean Giraud) featured in Jodorowsky’s Dune, a documentary chronicling Alejandro Jodorowsky’s quest to produce a live-action Dune film in the 1970s; the film sadly never made it past pre-production.

Professor Gale stands in the poppy field against the backdrop of the inter-dimensional probe, with a sister universe hovering in the sky.

Professor Gale sends a Kaiju-like monster back to Earth after a threatening messenger warns her of danger awaiting her there.

Professor Gale’s organic and mechanical schematics for blasting probes between the folds of the sister universes.

A mysterious creature called “Galecrow” witnesses worlds collapsing in upon one another in the distant future of Oz.

Imaginary Worlds and Surreal Physics

There is a limit to how much information a model can process in a single prompt.

The prompt engineer has to creatively reduce the amount of text in the prompt down to the most essential details, or repeatedly paraphrase, until the model is able to successfully generate what the user intends.

This can become complex when the user is describing a phenomenon that is difficult to put into words. These generations represent that scenario: they show what I was able to achieve when challenged with visualizing a scene that was difficult to describe in clear, parsable language.
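One way to think about reducing a prompt to its essentials is to rank each descriptive phrase and keep only what fits within a budget. The Python sketch below illustrates that idea under loud assumptions: the `condense_prompt` helper, the priority numbers, and the word budget are all hypothetical and do not reflect the actual limits of any particular model.

```python
from typing import List, Tuple


def condense_prompt(phrases: List[Tuple[int, str]], max_words: int) -> str:
    """Keep the most essential phrases that fit within a word budget.

    phrases: (priority, text) pairs, where a lower number means more essential.
    max_words: an illustrative budget, not a real model limit.
    """
    # Visit phrases from most to least essential (ties keep their original order).
    ranked = sorted(range(len(phrases)), key=lambda i: phrases[i][0])

    kept = set()
    used = 0
    for i in ranked:
        cost = len(phrases[i][1].split())
        if used + cost <= max_words:
            kept.add(i)
            used += cost

    # Emit the surviving phrases in the order the author wrote them.
    return ", ".join(text for i, (_, text) in enumerate(phrases) if i in kept)


if __name__ == "__main__":
    draft = [
        (1, "Dorothy falls through a wormhole"),
        (3, "swirling motes of dust"),
        (2, "transformative green aether"),
        (4, "distant stars bending like glass"),
    ]
    print(condense_prompt(draft, max_words=10))
```

In practice the judgment about which details are essential is a creative one; the sketch only shows the trimming step once those priorities have been decided.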

From Top: (Image) Dorothy is transported through a wormhole of transformative green aether back to Oz;

(Animation) The dome world known as Oobliad spins in the dark plasma medium of its unique universe;

(Animation) Dorothy’s probe arrives deep within the Earth’s core in the distant future

Challenges

Unconventional Combinations

Green Skin (non-human skin tone) — I was not able to produce this by text alone; it required the use of photo reference.

Points of Reference

Resulting Combinations

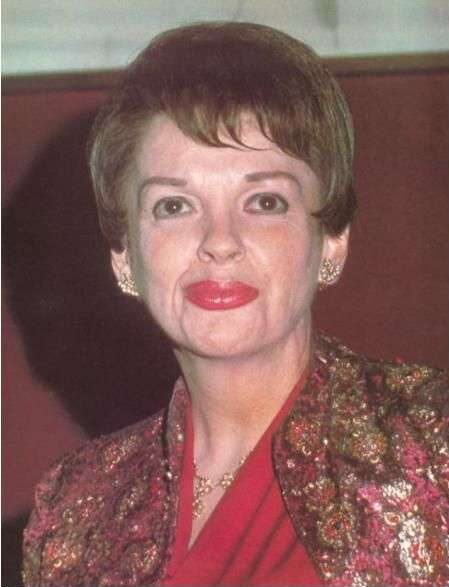

Top: Judy Garland (age 45) and a common garden ornament originating from UK folklore, the Green Woman, were used as references within the prompt

Bottom: A series of variations in the early stages of achieving the desired combination between skin tone and other formal qualities of appearance (gender, age, character reference)

Ageing — Achieving a natural impression of the various stages of ageing proved challenging; Midjourney had a tendency to return outputs that appeared too artificially attractive and young, despite the intentional use of age descriptors in the body of the prompt.

Top: Initial generations had a tendency to appear overly youthful despite the deliberate inclusion of descriptive language regarding age.

Middle: A variation eventually took on a naturally aged appearance, and so further variations were prompted from that root image

Bottom: A final image shows the character Glinda, an upscale created from a desired generation

Uncommon creature of fantasy — It took four or five working sessions before I achieved the synthesis of elements I was looking for in the winged monkeys. Winged monkeys are not common creatures of fantasy in comparison to, say, a unicorn, which likely appeared in abundance in the model’s training data.

Other Observations

Model Strengths

Texture and Particle Systems — Particularly in the case of generative animation, the tools excelled at producing textural qualities and/or particle systems in motion, such as the green cloud formations found in the Cacophonous Apparition series

Style — Midjourney and Pikabot both excelled at representing styles and periods when precise vocabulary was deployed in the prompt

An example of a successful particle system (a cloudlike aether with a quality of movement superimposed)

Top/Left: Actress Julie Andrews runs to the top of a grassy hill with the Swiss Alps in the background in The Sound of Music (1965)

Bottom/Right: The prompt for this image of an adult Dorothy Gale running through a field of poppies made clear stylistic reference to The Sound of Music (1965)

Model Limitations

Uncanny Valley — Although this phenomenon is being actively addressed in recent product releases, the sense that human representations in generative AI appear almost alien or inhuman has been a common critique of generative images and video. The image below is a more pronounced example of the model not quite getting it “right”. Earlier models were highly prone to adding extra fingers and limbs.

Inconsistent Characterization — The tools struggled to produce consistent characterizations across new prompts, even when using seed or image references; this challenge of consistency is progressively being addressed in newer iterations of models.

Ozma, the Reclusive Queen of Oz (Midjourney)

Integrated Media

Future practical applications for generative image and animation tools could include producing elements of scenery that would normally be created with modern-day CGI.

Recent Advances in Generative Tech

Google’s Veo 3 has opened new creative doorways; the tool supports integrated transitions between vignettes, producing complex animated sequences with consistent characterization and a linked progression of scenes.

The above short film was made entirely using Google’s new Flow tool. The tool integrates a creative user interface streamlined for filmmakers with the powerful generative Veo 3 animation model.

Project Conclusion

I learned to apply the syntax and structure suited to the model being used to envision creative ideas.

I practiced trial and error in the use of prose-based, cultural vocabulary-based and action-based language in my prompts.

I developed a system of production:

- Achieve successful generation/combination through the use of structure, syntax, creative language and image references

- Produce variations of the most successful generations/combinations

- (For Images) Make precise corrections by re-prompting lassoed sections of the image

- (For Animations) Re-prompt many times using slightly varied sentence structures and syntax-based attributes (such as camera zoom and panning, and degree/quantity of motion)

I learned more deeply about generative AI: how models are trained, how they function, and what their strengths and limitations are.

© Copyright. Matthew Crans. 2025.